CRC Software Accelerator

Abstract

Today, heterogeneous computing platforms are found in many computing systems, where they improve software performance by offloading performance-critical functions to external hardware accelerators.

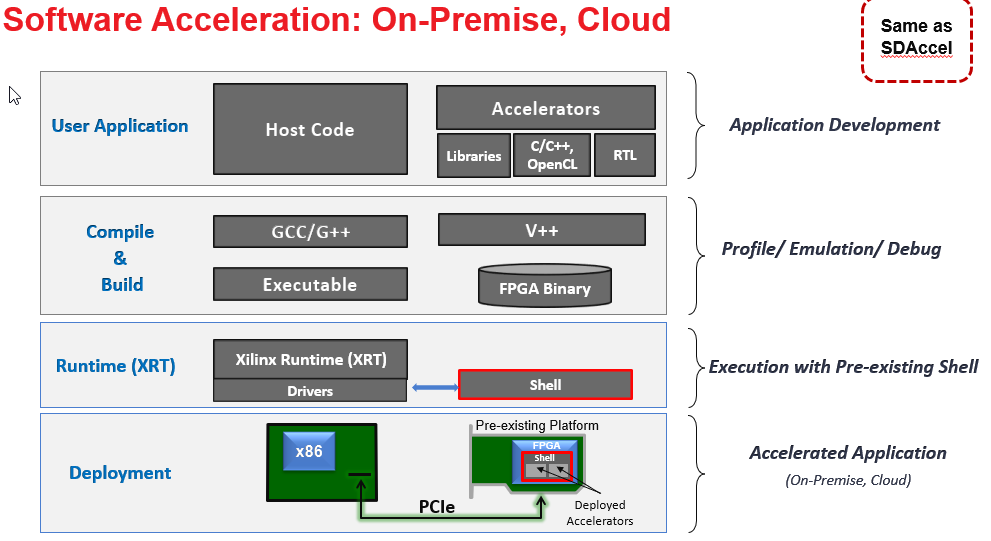

In this study, a Xilinx reconfigurable hardware accelerator board, the Alveo U250, was used together with the Xilinx unified software development tool, VITIS, to accelerate a CRC16 function using the tool flow shown in the figure.

This top-down approach was used to create both the host code and the kernel code within the same development environment. While the VITIS software platform supports OpenCL, C/C++, and RTL kernels, this study is limited to comparing CRC performance when the function is implemented as an OpenCL kernel, as a C/C++ kernel, and as a host application function.

This study touches on some of the high-level aspects of how and when to accelerate software applications using kernel accelerators; the new unified software tool, VITIS, offers many more options than are covered here. For this study, the goals and areas of interest are as follows:

- Show the magnitude of the expected performance improvement of a kernel-based function vs. the same function running as part of the host code.

- In theory, OpenCL is more portable but allows less optimization than C/C++ with HLS. Find out whether there is any significant performance advantage to implementing an OpenCL kernel versus a C/C++ kernel.

- Determine whether there are cases where using a kernel accelerator does not result in better performance because of the latency overhead of launching the kernel and moving the first block of data to the kernel and back. Is there a point below which a smaller data block sent to the kernel function results in lower performance than the same function running in the host code? If so, where is that threshold?

Setup Information

xbutil scan information for the server xnhalveosys:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

System Configuration

Sysname: Linux

Release: 4.4.0-138-generic

Version: #164-Ubuntu SMP Tue Oct 2 17:16:02 UTC 2018

Machine: x86_64

Glibc: 2.23

Distribution: Ubuntu 16.04.5 LTS

Now: Tue Sep 24 14:13:11 2019

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

XRT

Version: 2.2.2158

Git Hash: ab0d3e66d0244d65b520f1abf15446739d46acdb

Git Branch: 2019.1

Build Date: 2019-05-24 11:40:38

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Shell: xilinx_u250_qdma_201910_1

FPGA: xcu250-figd2104-2L-e

IDCode: x4b57093

Vendor: 0x10ee

Device: 0x5015

SubDevice: 0xe

SubVendor: 0x10ee

DDR size: 49152 MB

DDR count: 3

Clock0: 300

Clock1: 500

Clock2: 0

PCIe: GEN 3x16

DMA chan(bidir): 2

MIG Calibrated: true

P2P Enabled: false

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

DMA Transfer Metrics

Chan[0].h2c: 452 MB

Chan[0].c2h: 30379 KB

Chan[1].h2c: 0 Byte

Chan[1].c2h: 0 Byte

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Software Development Environment

Xilinx VITIS Development Environment:

VITIS v2019.1 (64-bit)

SW Build 2552052 on Fri May 24 14:48:04 MDT 2019

Hardware Accelerator: Alveo U250

Vitis Project Background

Three projects were implemented with the Vitis tool to run the CRC function in the host code and in the kernel code. Each project calls a time function to measure how long the CRC function takes to complete in the host code and in the kernel code. For the kernel code, the measured time includes the time to launch the kernel, plus the time to transfer the block of data from host DDR memory to the kernel (directly, or indirectly through kernel DDR memory), plus the time to perform the CRC calculation, plus the time to transfer the CRC result back (via kernel DDR memory) to host DDR memory.
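The study does not show its time function. As a minimal sketch, assuming a POSIX host such as the Ubuntu server listed above, a single call can be bracketed with `clock_gettime(CLOCK_MONOTONIC, ...)`. The names `block_fn`, `byte_sum`, and `time_call_us` are hypothetical, and `byte_sum` is only a stand-in workload so the sketch is self-contained.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L  /* exposes clock_gettime under strict ISO C modes */
#endif
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical signature for the function under test: it takes a data block
 * and returns a 16-bit result, such as a CRC. */
typedef uint16_t (*block_fn)(const uint8_t *data, size_t len);

/* Hypothetical stand-in workload: 16-bit wrapping sum of all bytes. */
uint16_t byte_sum(const uint8_t *data, size_t len)
{
    uint16_t sum = 0;
    for (size_t i = 0; i < len; ++i)
        sum = (uint16_t)(sum + data[i]);
    return sum;
}

/* Returns the elapsed wall-clock time, in microseconds, of one call to fn.
 * The result is stored through *result so the call is not optimized away. */
double time_call_us(block_fn fn, const uint8_t *data, size_t len, uint16_t *result)
{
    struct timespec start, stop;
    clock_gettime(CLOCK_MONOTONIC, &start);
    *result = fn(data, len);
    clock_gettime(CLOCK_MONOTONIC, &stop);
    return (double)(stop.tv_sec - start.tv_sec) * 1e6 +
           (double)(stop.tv_nsec - start.tv_nsec) / 1e3;
}
```

For the kernel measurement described above, the same start/stop bracket would enclose the kernel launch, the data transfers, and the read-back of the result rather than a single host function call.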

To identify clearly where and how each CRC function runs, the four versions of the same CRC function, implemented across the three projects, are labelled Host CRC, OCL-Kernel CRC, C-Kernel CRC, and C-Kernel-ST CRC. Here, ST denotes streaming from host to card (H2C), that is, directly into the kernel, and OCL is the abbreviation for OpenCL.

The CRC function was chosen because it is simple and widely known, especially among software developers. Several public domain resources provide complete CRC code; the version copyrighted by Michael Barr was selected for this study. That code is set up to perform three different types of CRC calculation, each coded both as a slower bit-by-bit algorithm and as a faster look-up table algorithm. Of these choices, the CRC16-ARC type using the slower bit-by-bit algorithm was chosen for this study.
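To make the two algorithms concrete, here is a minimal sketch of CRC16-ARC (polynomial 0x8005, initial value 0x0000, input and output reflected, no final XOR) in both forms. This is not Barr's code: it uses the equivalent LSB-first formulation with the reflected polynomial 0xA001, which performs the bit reflection implicitly. The function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* 0x8005 bit-reversed; shifting right with it implements the reflection. */
#define CRC16_ARC_POLY_REFLECTED 0xA001u

/* Slower bit-by-bit algorithm: 8 shift/XOR steps per input byte. */
uint16_t crc16_arc_slow(const uint8_t *data, size_t len)
{
    uint16_t crc = 0x0000;                    /* initial value */
    for (size_t i = 0; i < len; ++i) {
        crc ^= data[i];                       /* fold the next byte into the low bits */
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ CRC16_ARC_POLY_REFLECTED)
                             : (uint16_t)(crc >> 1);
    }
    return crc;                               /* final XOR is 0x0000 */
}

/* Faster look-up table algorithm: one table access per input byte. */
static uint16_t crc16_arc_table[256];

/* Precomputes the effect of 8 bit-steps for every possible byte value. */
void crc16_arc_init_table(void)
{
    for (unsigned n = 0; n < 256; ++n) {
        uint16_t crc = (uint16_t)n;
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ CRC16_ARC_POLY_REFLECTED)
                             : (uint16_t)(crc >> 1);
        crc16_arc_table[n] = crc;
    }
}

/* Requires crc16_arc_init_table() to have been called once. */
uint16_t crc16_arc_fast(const uint8_t *data, size_t len)
{
    uint16_t crc = 0x0000;
    for (size_t i = 0; i < len; ++i)
        crc = (uint16_t)((crc >> 8) ^ crc16_arc_table[(crc ^ data[i]) & 0xFFu]);
    return crc;
}
```

Both functions return 0xBB3D for the ASCII string "123456789", which is the standard CRC16-ARC check value. The table version trades 512 bytes of memory for removing the inner 8-step loop.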

The name of each of the three projects below indicates which CRCs it implements. The details of these projects and their resulting performance are described in the following sections.

- Crc16 – Host CRC and OCL-Kernel CRC.

- Crc16_c – Host CRC and C-Kernel CRC.

- Crc16_c_st – Host CRC and C-Kernel-ST CRC.

Host CRC vs. OCL-Kernel CRC (Memory Mapped H2C and C2H)

For this project, named CRC16, the kernel is coded using OpenCL constructs. In the host code, the user is prompted to select the number of bytes of an initialized data block to send to the Host CRC and the OCL-Kernel CRC. The same content is sent to both CRC functions, and the returned CRC values are compared to verify that they match. The valid block sizes are 8, 16, 512, 1K, 64K, and 1M bytes. After the selection, the data block moves in the following sequence:

- 8-bit data from Host DDR4 into Host CRC

- 16-bit CRC result from Host CRC back to Host DDR4

- 8-bit data from Host DDR4 to the Kernel DDR4

- 8-bit data from Kernel DDR4 into OCL-Kernel CRC

- 16-bit CRC result from OCL-Kernel CRC back to Kernel DDR4

- 16-bit CRC from Kernel DDR4 back to Host DDR4
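The kernel-side part of this memory-mapped sequence (Host DDR4 to Kernel DDR4, the kernel reading and writing Kernel DDR4, and the CRC copied back to Host DDR4) can be modelled in plain C to show the extra staging copy. This is a software model under stated assumptions, not XRT or OpenCL code: `memcpy` stands in for the PCIe DMA transfers, static arrays stand in for card DDR4 buffers, and all names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical capacity of the simulated card buffer: 1 MB, the largest
 * block size used in the study. */
#define CARD_DDR_BYTES (1u << 20)

/* Plain arrays standing in for buffers allocated in card DDR4. */
static uint8_t  card_ddr_in[CARD_DDR_BYTES];
static uint16_t card_ddr_crc;

/* CRC16-ARC, bit-by-bit (reflected polynomial 0xA001, init 0x0000). */
static uint16_t crc16_arc(const uint8_t *p, size_t n)
{
    uint16_t crc = 0x0000;
    while (n--) {
        crc ^= *p++;
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ 0xA001u)
                             : (uint16_t)(crc >> 1);
    }
    return crc;
}

/* Models the memory-mapped flow. Returns 0 on success, -1 if the block
 * does not fit in the simulated card buffer. */
int mm_kernel_crc_model(const uint8_t *host_in, size_t len, uint16_t *host_crc)
{
    if (len > CARD_DDR_BYTES)
        return -1;
    memcpy(card_ddr_in, host_in, len);            /* Host DDR4 -> Kernel DDR4 (H2C) */
    card_ddr_crc = crc16_arc(card_ddr_in, len);   /* kernel reads DDR4, writes CRC to DDR4 */
    *host_crc = card_ddr_crc;                     /* Kernel DDR4 -> Host DDR4 (C2H) */
    return 0;
}
```

As in the project, the host can then compare this result with its own Host CRC of the same block.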

A timer function is used to measure the time to complete the Host CRC and the OCL-Kernel CRC functions. See the result below.

This result clearly shows that at 1M bytes the OCL-Kernel CRC performs much better than the Host CRC. See below

This zoomed-in graph shows the cross-over point lying above 1K bytes: below it, the overhead inherent in the OCL-Kernel CRC construct lets the Host CRC perform better. At the 64K byte mark, however, the OCL-Kernel CRC performs significantly better. See below

Between 1KB and 1MB, the Host CRC time grows with a much steeper gradient than the OCL-Kernel CRC time. See below

Host CRC vs. C-Kernel CRC (Memory Mapped H2C and C2H)

For this project, named CRC16_C, the kernel is coded using C/C++ constructs, and the data flow sequence is the same as in the previous project, CRC16. In the host code, the user is prompted to select the number of bytes of an initialized data block to send to the Host CRC and the C-Kernel CRC. The same content is sent to both CRC functions, and the returned CRC values are compared to verify that they match. The valid block sizes are 8, 16, 512, 1K, 64K, and 1M bytes. After the selection, the data block moves in the following sequence:

- 8-bit data from Host DDR4 into Host CRC

- 16-bit CRC result from Host CRC back to Host DDR4

- 8-bit data from Host DDR4 to the Kernel DDR4

- 8-bit data from Kernel DDR4 into C-Kernel CRC

- 16-bit CRC result from C-Kernel CRC back to Kernel DDR4

- 16-bit CRC from Kernel DDR4 back to Host DDR4
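The study's kernel source is not included. The following is a minimal sketch of what a memory-mapped C kernel for this CRC might look like in the Vitis/SDAccel 2019.x style; the function name `crc16_kernel` and its argument list are hypothetical. The HLS INTERFACE pragmas map the pointers to AXI memory-mapped ports on card DDR and the arguments to AXI-Lite control registers; an ordinary C compiler ignores them, so the same function can be tested on the host. In a C++ kernel source, the top-level function would be declared `extern "C"`.

```c
#include <stdint.h>

/* Hypothetical top-level kernel: reads size bytes from card DDR through the
 * in pointer and writes one 16-bit CRC16-ARC result through out. */
void crc16_kernel(const uint8_t *in, uint16_t *out, unsigned int size)
{
#pragma HLS INTERFACE m_axi     port=in     offset=slave bundle=gmem
#pragma HLS INTERFACE m_axi     port=out    offset=slave bundle=gmem
#pragma HLS INTERFACE s_axilite port=in     bundle=control
#pragma HLS INTERFACE s_axilite port=out    bundle=control
#pragma HLS INTERFACE s_axilite port=size   bundle=control
#pragma HLS INTERFACE s_axilite port=return bundle=control

    uint16_t crc = 0x0000;                     /* CRC16-ARC initial value */
    for (unsigned int i = 0; i < size; ++i) {  /* one byte (8 bits) per iteration */
        crc ^= in[i];
        for (int bit = 0; bit < 8; ++bit)      /* bit-by-bit, reflected poly 0xA001 */
            crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ 0xA001u)
                             : (uint16_t)(crc >> 1);
    }
    *out = crc;                                /* single 16-bit result to card DDR */
}
```

Because the input is read one byte per loop iteration, this sketch mirrors the 8-bit-at-a-time data movement used throughout the study.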

A timer function is used to measure the time to complete the Host CRC and the C-Kernel CRC functions. See the result below.

The results for this case are very similar to those of the previous OCL-Kernel CRC case.

Host CRC vs. C-Kernel-ST CRC (Streaming H2C and Memory Mapped C2H)

For this project, named CRC16_C_ST, the kernel is coded using C/C++ constructs and is the same as in the CRC16_C project, except that here the data block is streamed from host DDR memory directly into the kernel. In the host code, the user is prompted to select the number of bytes of an initialized data block to send to the Host CRC and the C-Kernel-ST CRC. The same content is sent to both CRC functions, and the returned CRC values are compared to verify that they match. The valid block sizes are 8, 16, 512, 1K, 64K, and 1M bytes. After the selection, the data block moves in the following sequence:

- 8-bit data from Host DDR4 into Host CRC

- 16-bit CRC result from Host CRC back to Host DDR4

- 8-bit data streamed from Host DDR4 into C-Kernel-ST CRC

- 16-bit CRC result from C-Kernel-ST CRC to Kernel DDR4

- 16-bit CRC from Kernel DDR4 back to Host DDR4
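In this flow, the kernel consumes the data block as an H2C stream instead of reading it from a buffer in Kernel DDR4. The sketch below models that difference in plain C: the input is a sequence of beats, each carrying one byte and a `last` flag, read strictly in order with no random access. The types `stream_beat` and `byte_stream` and the function `stream_read` are hypothetical stand-ins; an actual Vitis streaming kernel would typically use an `hls::stream` of AXI4-Stream packets, which this model does not reproduce.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t data;   /* one byte of the data block */
    int     last;   /* nonzero on the final beat of the block */
} stream_beat;

typedef struct {
    const stream_beat *beats;
    size_t             pos;    /* index of the next beat to read */
} byte_stream;

/* Reads the next beat in order; the model never seeks backwards. */
static stream_beat stream_read(byte_stream *s)
{
    return s->beats[s->pos++];
}

/* Consumes beats until 'last' is seen and returns the CRC16-ARC of the
 * block. Assumes the block contains at least one beat. */
uint16_t crc16_stream_kernel(byte_stream *in)
{
    uint16_t crc = 0x0000;
    stream_beat beat;
    do {
        beat = stream_read(in);
        crc ^= beat.data;
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ 0xA001u)
                             : (uint16_t)(crc >> 1);
    } while (!beat.last);
    return crc;   /* in the C-Kernel-ST flow, this result goes to Kernel DDR4 */
}
```

The block length is implied by the `last` flag rather than passed as a size argument, which is one practical difference between the streaming and memory-mapped kernel interfaces.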

A timer function is used to measure the time to complete the Host CRC and the C-Kernel-ST CRC functions. See the result below.

Again, the results for this kernel CRC are similar to those of the other kernel CRCs.

Average CRC Calculation Times

This section tabulates the average time for the CRC functions. For the kernel CRCs, the average was calculated from 5 timed measurements, while the Host CRC average was calculated from 15 timed measurements.

The Host CRC is the baseline and is non-accelerated, while the other three CRC functions are hardware accelerated as kernels on the Alveo U250.
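The averages in this section are arithmetic means over repeated timed runs. Because the kernel averages rest on only 5 samples, reporting the minimum and maximum next to the mean can help show how much the runs vary. This is a minimal sketch; the type `time_summary` and the function `summarize_times` are hypothetical, and the study does not say how its averages were computed beyond the sample counts.

```c
#include <stddef.h>

/* Summary statistics for a set of timing samples (any time unit). */
typedef struct {
    double mean;
    double min;
    double max;
} time_summary;

/* Summarizes n >= 1 samples, e.g. 5 kernel-CRC runs or 15 host-CRC runs. */
time_summary summarize_times(const double *t, size_t n)
{
    time_summary s = { t[0], t[0], t[0] };
    double sum = t[0];
    for (size_t i = 1; i < n; ++i) {
        sum += t[i];
        if (t[i] < s.min) s.min = t[i];
        if (t[i] > s.max) s.max = t[i];
    }
    s.mean = sum / (double)n;
    return s;
}
```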

Summary Performance Plots (Host CRC, OCL-Kernel CRC, C-Kernel CRC, C-Kernel-ST CRC)

Conclusions

The CRC code is essentially the same for the Host CRC and the kernel CRCs. The differences lie in the latency of each implementation and in whether the kernel code is written in OpenCL or C/C++. This study measures each CRC function only on the first call. Subsequent calls to a kernel function could perform better because the kernel would already be loaded.

Overall, the performance of the three kernel CRC types is flatter and more linear across data block sizes from 8 bytes through 1M bytes than that of the Host CRC. From 8 bytes to roughly 8K bytes the Host CRC performed better, while above 8K the kernel CRCs performed better, especially at 64K and 1M bytes, where the kernel CRCs took only 12% and 2%, respectively, of the Host CRC time to perform the CRC calculation. Conversely, at 8, 16, 512, and 1K bytes, the Host CRC took only 0.5%, 0.5%, 8%, and 16%, respectively, of the kernel CRCs' time. Clearly, accelerating a software function in a hardware accelerator makes more sense with larger data blocks; for smaller data blocks, it is better to run the function on the host processor. The cross-over point will vary with the function being accelerated and with the acceleration technique used.
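The cross-over behaviour can be reasoned about with a simple linear cost model, which the study itself does not fit. If the host takes h time units per byte, and the kernel takes a fixed overhead o (launch plus the first transfer) plus k time units per byte, the two times are equal at n* = o / (h - k), provided h > k. The sketch below evaluates that formula; the function name and all parameter values are hypothetical, not measurements from this study.

```c
/* Hypothetical linear cost model:
 *   host:   t_host(n)   = host_per_byte * n
 *   kernel: t_kernel(n) = overhead + kernel_per_byte * n
 * Returns the block size n (bytes) at which both take equal time, or -1.0
 * if the kernel's per-byte cost is not lower than the host's, in which case
 * the kernel never catches up. */
double crossover_bytes(double overhead, double host_per_byte, double kernel_per_byte)
{
    double gain_per_byte = host_per_byte - kernel_per_byte;
    if (gain_per_byte <= 0.0)
        return -1.0;
    return overhead / gain_per_byte;
}
```

With o = 750, h = 1.0, and k = 0.25 in arbitrary units, the model places the cross-over at 1000 bytes. A larger launch overhead, or a smaller per-byte advantage for the kernel, pushes the cross-over to larger blocks, which is consistent with the observation that the threshold depends on both the function and the acceleration technique.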

The OCL-Kernel CRC, C-Kernel CRC, and C-Kernel-ST CRC perform similarly to one another, except for a notable disadvantage for the OCL-Kernel CRC at 1MB. More data collected with larger data block sizes is needed to determine whether this divergence in performance continues.

The more surprising result is that the C-Kernel CRC and the C-Kernel-ST CRC performed very similarly, even at the 1M byte block size. Streaming data blocks directly from host memory to the kernel was expected to reduce the latency of the data movement and thus improve performance. That was not the case, and further investigation is needed to understand why.

Further improvements in CRC function throughput and in data-movement latency are possible. The C/C++ and RTL kernel implementations offer more options than the OpenCL kernel. Specifically, in this study all data blocks are moved and processed 8 bits at a time, but the Alveo platform supports moving data over buses up to 512 bits wide and can concurrently process multiple pieces of data packed within a 512-bit word. For more ideas and examples on improving throughput and latency, see the Xilinx GitHub repository https://github.com/Xilinx/SDAccel_Examples and its kernel examples.
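As an illustration of moving data in 512-bit words rather than one byte at a time, here is a minimal sketch in plain C, assuming a hypothetical `wide_word` type that holds one 64-byte bus beat. Each outer iteration performs one wide read; the CRC itself is still folded in byte by byte, because each step depends on the previous one. In an HLS kernel, the inner loop is the natural candidate for unrolling, trading logic area for more bytes processed per clock cycle, subject to timing. All names are hypothetical and this is not taken from the SDAccel examples.

```c
#include <stddef.h>
#include <stdint.h>

#define BEAT_BYTES 64   /* 512 bits = 64 bytes per bus beat */

/* Hypothetical 512-bit word type: 64 bytes moved together in one beat. */
typedef struct {
    uint8_t b[BEAT_BYTES];
} wide_word;

/* Folds one byte into a CRC16-ARC value (reflected poly 0xA001). */
static uint16_t crc16_arc_byte(uint16_t crc, uint8_t byte)
{
    crc ^= byte;
    for (int bit = 0; bit < 8; ++bit)
        crc = (crc & 1u) ? (uint16_t)((crc >> 1) ^ 0xA001u) : (uint16_t)(crc >> 1);
    return crc;
}

/* CRC16-ARC over nbytes laid out as consecutive 512-bit words; only the
 * valid bytes of the final, partial word are consumed. */
uint16_t crc16_arc_wide(const wide_word *words, size_t nbytes)
{
    uint16_t crc = 0x0000;
    size_t nwords = (nbytes + BEAT_BYTES - 1) / BEAT_BYTES;
    for (size_t w = 0; w < nwords; ++w) {
        wide_word word = words[w];          /* one wide read instead of 64 byte reads */
        size_t valid = nbytes - w * BEAT_BYTES;
        if (valid > BEAT_BYTES)
            valid = BEAT_BYTES;
        /* Candidate loop for unrolling in an HLS kernel. */
        for (size_t i = 0; i < valid; ++i)
            crc = crc16_arc_byte(crc, word.b[i]);
    }
    return crc;
}
```

The result matches the byte-at-a-time CRC for any block length; only the granularity of the memory reads changes.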

About Luke Rengpraphun

As an FAE in the Northeast region, Luke Rengpraphun is interested in embedded, acceleration, and ML applications, along with development tools that enable hardware, software, and system developers to take advantage of these types of solutions. Before joining AMD in October 2018, Luke had long been involved with programmable solutions, both while developing products for the military, test, telecom, and industrial markets and while serving customers in FAE roles at Altera (Intel PSG), Arrow, and Achronix. In his free time, Luke enjoys traveling, sharing tasty foods, and making new connections.