Whole Graph Optimizer (WeGO)

Introduction

Whole Graph Optimizer (WeGO) was released in Vitis AI™ v2.0. It aims to offer a smooth way to deploy TensorFlow 1.x models on cloud DPUs by integrating the Vitis AI development kit with the TensorFlow framework.

WeGO is a straightforward inference solution that balances ease of use with performance by reusing as much as possible of the Python code (including pre-processing and post-processing) written during model training, which greatly speeds up model deployment and evaluation on cloud DPUs.

Workflow

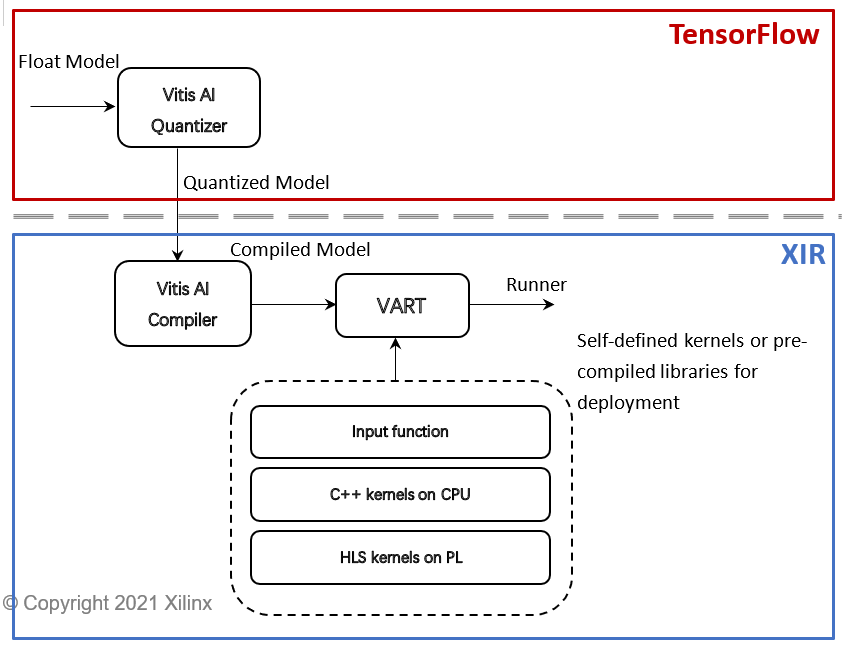

The traditional Vitis AI workflow focuses on performance: it uses the Vitis AI compiler to parse the model from the original framework into the Xilinx intermediate representation for deep optimization. After compilation, the user needs to rewrite the code for unsupported operators in C++ or Python, or use the Graph Runner in the AI Library for inference.

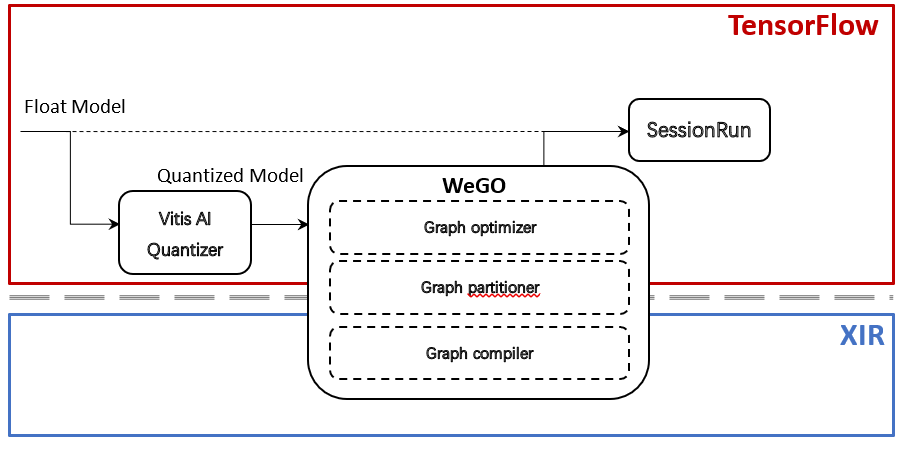

In Vitis AI 2.0, the WeGO flow still takes the quantized graph as input. The create_wego_graph() API first partitions the graph, then passes the DPU subgraphs to the VAI_C compiler and VART to create DPU runners for acceleration. The TensorFlow session.run() API then schedules execution of the whole graph, running each part on either the CPU or the DPU.
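The partition-then-schedule idea can be illustrated with a toy model in plain Python. This is only a sketch under stated assumptions, not WeGO's implementation: the graph is simplified to a linear list of operator names, the `DPU_SUPPORTED` set is hypothetical (the real supported-operator list depends on the DPU target and is not given in this article), and `partition` and `run_graph` are illustrative helpers rather than Vitis AI APIs.

```python
from itertools import groupby

# Hypothetical set of operator types the accelerator can run.
# The real list depends on the DPU target; this one is for illustration only.
DPU_SUPPORTED = {"Conv2D", "Relu", "MaxPool", "Add"}


def partition(ops, supported=DPU_SUPPORTED):
    """Split a linear sequence of op names into maximal runs per device.

    Consecutive supported ops form one "DPU" subgraph; everything else
    falls back to a "CPU" subgraph handled by the native framework.
    """
    def device_of(op):
        return "DPU" if op in supported else "CPU"

    return [(device, list(run)) for device, run in groupby(ops, key=device_of)]


def run_graph(ops, supported=DPU_SUPPORTED):
    """Walk the subgraphs in order and record which device handles each one."""
    return [
        f"{device}: {' -> '.join(sub_ops)}"
        for device, sub_ops in partition(ops, supported)
    ]


# A made-up model: two accelerator-friendly stretches separated by
# operators that the toy DPU does not support.
model = ["Conv2D", "Relu", "MaxPool", "NonMaxSuppression", "Conv2D", "Add", "Softmax"]
subgraphs = partition(model)

for line in run_graph(model):
    print(line)
```

A real graph is a DAG rather than a list, and the actual flow also compiles each DPU subgraph before execution, but the sketch shows why unsupported operators need no hand-written replacement: they stay in a CPU subgraph and the framework runs them as usual.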

Discussion

WeGO, also known as framework inference, benefits users at the model selection or development stage. After training, it takes little overhead to get an overall picture of the inference result, such as the minimum performance to expect or the DPU support status. This quick feedback shortens the model development cycle and exposes performance bottlenecks at an early stage. Once the model is developed, users can further optimize performance in a more fine-grained way with the Vitis AI workflow.

In Vitis AI 2.0, WeGO supports only TensorFlow 1.x; TensorFlow 2.x and PyTorch support are on the roadmap. Benchmarks of two examples (Resnet50 and Yolov3) are shown in the table below.

| Model | Resnet50 | Yolov3 |
| --- | --- | --- |
| Workload (GB) | 6.97 | 65.63 |
| Input Resolution | 224x224 | 416x416 |
| Total Images Running | 52800 | 19200 |
| Total Time (s) | 12.939 | 42.862 |
| FPS | 4080.79 | 447.95 |

Table 1: Benchmark of WeGO examples with Intel(R) Xeon(R) Bronze 3104 CPU @ 1.70GHz
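The FPS row follows from the two rows above it: throughput is the total number of images divided by the total time. The short check below recomputes it from the figures in Table 1; the dictionary layout and the `throughput` helper are illustrative names, and no new measurements are introduced.

```python
# Figures copied from Table 1; nothing here is measured anew.
runs = {
    "Resnet50": {"images": 52800, "seconds": 12.939, "reported_fps": 4080.79},
    "Yolov3": {"images": 19200, "seconds": 42.862, "reported_fps": 447.95},
}


def throughput(images, seconds):
    """Frames per second = images processed / wall-clock time in seconds."""
    return images / seconds


recomputed = {
    name: throughput(run["images"], run["seconds"]) for name, run in runs.items()
}

for name, fps in recomputed.items():
    print(f"{name}: {fps:.2f} FPS (table reports {runs[name]['reported_fps']})")
```

The recomputed values agree with the table to within about 0.1 FPS, which is consistent with the total times having been rounded to three decimal places.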

Visit the following GitHub link for more detailed information and step-by-step instructions to run the demo on the Xilinx VCK5000 Production board.

Conclusion

WeGO, as a newly released data center AI inference workflow, is an outstanding complement to the current Vitis AI solution. It minimizes deployment effort by reusing the code from the training phase to get early feedback on the inference result.

It benefits users in three major ways:

Ease of Use:

- Straightforward for users, with an extremely smooth transition from training to inference

- Leverage native framework support to deploy DPU-unsupported operators to the CPU with minimal effort

- Graph partition, quantization, and compilation automatically take place within frameworks

- Useful for deploying user-defined AI models, in which the DPU-unsupported subgraphs fall back to the native framework for execution

Performance:

- Achieve great end-to-end performance, close to native Vitis AI

- Further improve CPU subgraph performance through integration with the AMD ZenDNN versions of TensorFlow and PyTorch

Adaptive AI solution for data center:

- Quickly evolve with the TensorFlow and PyTorch open-source communities

- Unify the AI inference software solution with AMD for data center AI

WeGO in Vitis AI 2.0 supports a limited set of TensorFlow 1.15 models. Support for TensorFlow 2 and PyTorch will be added in the next release. Stay tuned!

About Fan Zhang

Dr. Fan Zhang serves as an AI Specialist in the AMD Tech Marketing Department and is responsible for the innovation and promotion of AMD AI solutions for different vertical markets worldwide. Before joining AMD in July 2018, Dr. Zhang served as a tech lead in the Deephi business department. Before that, Dr. Zhang was a core R&D researcher at an overseas company and has nearly ten years of project development experience. Fan Zhang completed his Ph.D. and M.Sc. degrees in EE at the University of Nottingham.
